High Performance Aviation

Risk Management in General Aviation

Throughout our lives, we encounter and mitigate risk daily. Whether driving a car, walking down the street, or staying up late to watch the game, many of our everyday activities carry some level of risk. How we manage that risk to an acceptable level is crucial to avoiding an unfavorable outcome. Likewise, whenever a pilot takes control of an aircraft, whether it is a Cessna 150 or a Boeing F/A-18 Super Hornet, there are inherent risks. These risks escalate during departure, approach, and high-workload situations (inclement weather, high-traffic airspace, in-flight emergencies, etc.), where a pilot can easily become distracted or overwhelmed. This is where risk management and decision making become critical tools that pilots must use to ensure a safe flight.

In professional aviation, risk management is formalized through a Safety Management System (SMS), which the International Civil Aviation Organization (ICAO) defines as “A systematic approach to managing safety, including the necessary organizational structures, accountabilities, policies, and procedures” (ICAO, 2013). Although SMS was initially adopted to improve safety in commercial aviation, it offers many benefits to General Aviation as well.

SMS divides the management of risk into three essential components:

- Hazard Identification – Identifying conditions or events that have the potential to cause harm. Hazard identification should use both reactive measures (learning from past events) and proactive measures (seeking out hazards before they lead to an occurrence).

- Risk Assessment – Hazards are evaluated for the seriousness of their potential effects and ranked in order of their risk-bearing potential.

- Risk Mitigation – If a risk is determined to be unacceptable, measures must be taken to strengthen the defenses against it, or to avoid or eliminate the risk entirely.

(SKYbrary, 2017)
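To make the assessment and mitigation steps more concrete, here is a minimal sketch of ranking hazards by severity and likelihood, the same two dimensions that the risk matrices commonly used in SMS practice combine. The 1-to-5 scales, the choice to multiply the two values, and the acceptability threshold of 6 are all assumptions made for illustration. They are not values taken from ICAO or the FAA, and `Hazard` and `assess` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed, simplified scales for illustration only: 1 (lowest) to 5 (highest).
MAX_ACCEPTABLE_SCORE = 6  # hypothetical threshold; higher scores call for mitigation


@dataclass
class Hazard:
    description: str
    severity: int    # seriousness of the potential negative effect, 1..5
    likelihood: int  # how probable it is that the hazard leads to harm, 1..5

    @property
    def risk_score(self) -> int:
        # One common simplification: combine severity and likelihood by multiplying.
        return self.severity * self.likelihood


def assess(hazards: list[Hazard]) -> list[tuple[Hazard, bool]]:
    """Rank hazards by risk score (ties broken by severity), highest first,
    and flag each one that exceeds the acceptable threshold."""
    ranked = sorted(hazards, key=lambda h: (h.risk_score, h.severity), reverse=True)
    return [(h, h.risk_score > MAX_ACCEPTABLE_SCORE) for h in ranked]


if __name__ == "__main__":
    # Hypothetical pre-flight hazards with made-up ratings.
    hazards = [
        Hazard("Pilot short on sleep before an evening flight", severity=3, likelihood=3),
        Hazard("Thunderstorms forecast along the route", severity=5, likelihood=2),
        Hazard("Unfamiliar destination airport", severity=2, likelihood=2),
    ]
    for hazard, needs_mitigation in assess(hazards):
        action = "mitigate or avoid" if needs_mitigation else "accept"
        print(f"{hazard.risk_score:>2}  {action:<17}  {hazard.description}")
```

Any hazard flagged for mitigation would then go through the third step: adding defenses (for example, delaying the flight or choosing a different route) and re-assessing until the remaining risk is acceptable.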

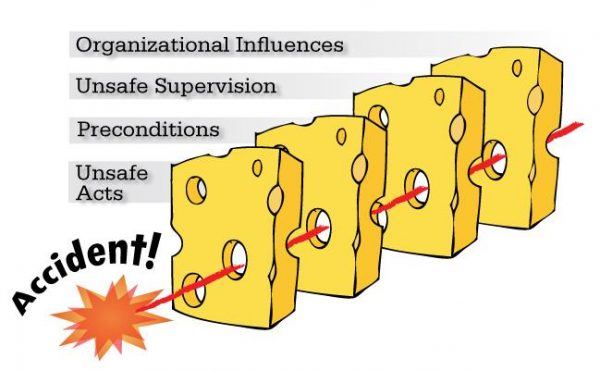

The Swiss Cheese Model

Professor James Reason developed an accident causation model known as the “Swiss Cheese Model.” In this model, the separate layers (slices of cheese) represent the multiple layers of system defenses (training, maintenance, automation, etc.). The holes within these layers represent single-point failures (latent and/or active failures), each of which alone may be inconsequential. However, when failures exist in several layers at once, their holes may align, allowing a hazard to pass through every defense and result in an accident or incident.
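The core idea of the model, that an accident requires a hole in every layer, can be sketched in a few lines. In this sketch, each layer is represented as the set of hazards it currently fails to stop. The layer names, hazards, and the `DefenseLayer`, `holes_align`, and `stopping_layers` names are hypothetical and chosen only to illustrate the concept. Reason’s model is qualitative and does not prescribe any particular representation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DefenseLayer:
    """One slice of cheese: a system defense such as training or automation."""
    name: str
    # Hazards this layer currently fails to stop (its "holes"): latent or active failures.
    holes: set[str] = field(default_factory=set)


def holes_align(layers: list[DefenseLayer], hazard: str) -> bool:
    """True if the hazard finds a hole in every layer, so no defense stops it."""
    return all(hazard in layer.holes for layer in layers)


def stopping_layers(layers: list[DefenseLayer], hazard: str) -> list[str]:
    """Names of the layers that still block the hazard (they have no hole for it)."""
    return [layer.name for layer in layers if hazard not in layer.holes]


if __name__ == "__main__":
    # Hypothetical layers and failures, chosen only to illustrate the model.
    layers = [
        DefenseLayer("Training", {"skipped checklist item"}),
        DefenseLayer("Procedures", {"skipped checklist item", "missed weather briefing"}),
        DefenseLayer("Automation", {"skipped checklist item"}),
    ]
    for hazard in ("skipped checklist item", "missed weather briefing"):
        if holes_align(layers, hazard):
            print(f"{hazard}: holes align, accident or incident possible")
        else:
            print(f"{hazard}: stopped by {', '.join(stopping_layers(layers, hazard))}")
```

Closing a single hole in any one layer is enough to remove a hazard from `holes_align`, which mirrors the model’s point that each individual defense matters.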

It is important to note that no matter how much precaution or planning has been performed, error is inevitable when humans are involved, and it cannot be completely eliminated through technology, training, procedures, or adherence to regulations. Therefore, it is crucial to identify, report, and analyze errors, as well as to develop strategies to control and minimize them (safety strategies). Errors are categorized as slips and lapses (failures in executing an intended action) and mistakes (failures in the plan of action itself). Examples of slips and lapses include failing to deploy the flaps or failing to follow a preflight checklist, while an example of a mistake is failing to obtain a weather briefing.

Aeronautical Decision Making: Perceive, Process and Perform

Another critical component of risk management is Aeronautical Decision Making (ADM). ADM refers to the mental process pilots use to determine the best course of action in response to a given set of circumstances. The Federal Aviation Administration (FAA) uses a three-part approach to ADM in which pilots should:

- Perceive the given set of circumstances for a flight

- Process by evaluating their impact on flight safety

- Perform by implementing the best course of action

The human brain is limited in how much incoming information it can process at a given time. Therefore, our brains must constantly (and unconsciously) filter incoming information and retain only the parts they deem important. When the brain becomes overloaded, it compensates by filling in the gaps, which can mean storing and acting on inaccurate information. The brain also tends to rely on previous patterns and expectations. This can be beneficial, but it can also be dangerous, causing one to overlook a critical step or action.

Confirmation Bias & Get-there-itis

Humans are also susceptible to confirmation bias, which causes the brain to seek out information that supports a decision already made. This bias can itself become a hazard. Finally, when evaluating options for a decision, it is necessary to be mindful of how the alternatives are framed. For example, if the choice of whether to continue through inclement weather is framed in positive terms (“I can arrive on time and get home as planned rather than divert”), a pilot is more likely to press on toward the destination despite the danger, a tendency often called “get-there-itis.” Framing the same choice in negative terms may make it more likely that the pilot will divert to an alternate airport.

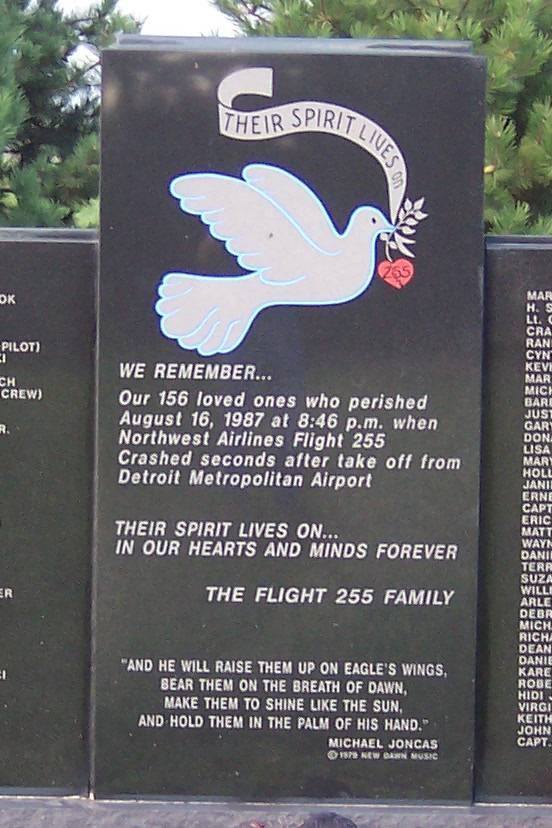

Throughout the history of aviation, failures to identify errors and mitigate risk have claimed many lives. One example is Northwest Airlines Flight 255. On August 16, 1987, a McDonnell Douglas DC-9-82 began its departure from runway 3C at Detroit Metropolitan Wayne County Airport. According to the flight recorders, the initial takeoff roll was uneventful until the captain called for the first officer to “Rotate.” As the aircraft struggled to become airborne, the stick shaker activated, and the Supplemental Stall Recognition System (SSRS) began sounding an aural tone and voice warnings indicating that the aircraft was in an aerodynamic stall. These warnings continued until the end of the recordings as the pilots struggled to keep the aircraft airborne.

Witnesses reported that the aircraft seemed to take longer than normal to become airborne and that, once airborne, it appeared to be at a higher-than-normal pitch attitude, with the tail close to striking the runway surface. After leaving the runway, the aircraft rolled from right to left before the left wing struck a light pole in a car rental lot, tearing a 4- to 5-foot section from the left wingtip. The aircraft continued rolling back and forth after this initial impact until it reached a 90-degree left-wing-low attitude just before striking a rooftop. It continued a counterclockwise roll before impacting a roadway outside the airport boundary, then skidded along the roadway and struck a railroad embankment, disintegrating and erupting into flames. In total, 148 passengers and six crewmembers were killed, as well as two people on the ground.

Upon investigation, the National Transportation Safety Board (NTSB) determined that the crew had failed to extend the flaps and slats to the required takeoff position (they remained fully retracted). The resulting lack of lift caused the longer-than-normal takeoff roll and the ensuing aerodynamic stall, which led to a loss of control. In its accident report, the NTSB concluded that the probable cause of the Flight 255 accident was “the flightcrew’s failure to use the taxi checklist to ensure that the flaps and slats were extended for takeoff. A contributing factor was the absence of electrical power to the airplane takeoff warning system which did not warn the flightcrew that the aircraft was not properly configured for takeoff.” (NTSB, 1988)

When preparing for flight, many considerations must be made to ensure safety. Even a seemingly inconsequential factor has the potential to cause harm and affect the overall safety of a flight. Something as small as a lack of sleep, an over-the-counter medication, or domestic stress may impair a pilot’s ability to safely operate an aircraft. Understanding our own human limitations, as well as the limitations of the aircraft and its systems, can aid in building a personal and/or organizational safety culture and in mitigating risks and hazards. The application of SMS in civil, commercial, and military aviation has proven effective, and it has great potential for reducing accidents and incidents in General Aviation.

For more information on Safety Management Systems, risk management in aviation, or Aeronautical Decision Making, please visit:

FAA Safety Management Systems for Aviation Service Providers

ICAO Global Aviation Safety Plan (GASP)

Scott Kellam is a private pilot with bachelor’s and master’s degrees in Aeronautics from Embry-Riddle Aeronautical University. His interest in human factors has guided his studies in aviation.
